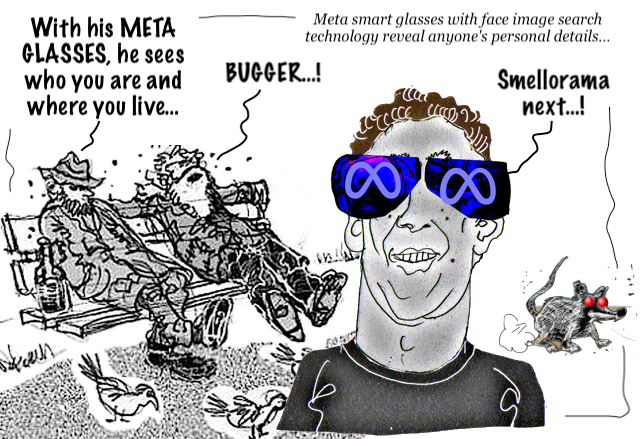

modern highwayman, pickpocketeer and tech bandit.....

Two Harvard students recently revealed that it's possible to combine Meta smart glasses with face image search technology to "reveal anyone's personal details," including their name, address, and phone number, "just from looking at them."

In a Google document, AnhPhu Nguyen and Caine Ardayfio explained how they linked a pair of Meta Ray Bans 2 to an invasive face search engine called PimEyes to help identify strangers by cross-searching their information on various people-search databases.

Meta smart glasses can be used to dox anyone in seconds, study finds

They then used a large language model (LLM) to rapidly combine all that data, making it possible to dox someone at a glance or to surface information to scam someone in seconds. It could also enable other nefarious uses, such as "some dude could just find some girl's home address on the train and just follow them home," Nguyen told 404 Media.

This is all possible thanks to recent progress with LLMs, the students said.

"This synergy between LLMs and reverse face search allows for fully automatic and comprehensive data extraction that was previously not possible with traditional methods alone," their Google document said.

Where previously someone could spend substantial time conducting their own search of public databases to find information based on someone's image alone, their dystopian smart glasses do that job in a few seconds, their demo video said.

The co-creators said that they altered a pair of Meta Ray Bans 2 to create I-XRAY to raise awareness of "significant privacy concerns" online as technology rapidly advances.

Meta Ray Bans “creepiest way” to test tech

They said that they chose Meta Ray Bans 2 for their project because the smart glasses "look almost indistinguishable from regular glasses." Nguyen told 404 Media that using Meta smart glasses was "the creepiest way" they could think of for a bad actor to try to scan faces undetected.

To prevent anyone from being doxxed, the co-creators are not releasing the code, Nguyen said on social media site X. They did, however, outline how their disturbing tech works and how shocked the random strangers used as test subjects were to discover how easily they could be identified just from information posted publicly online and accessed through the smart glasses.

Nguyen and Ardayfio tested out their technology at a subway station "on unsuspecting people in the real world," 404 Media noted. To demonstrate how the tech could be abused to trick people, the students even claimed to know some of the test subjects, seemingly using information gleaned from the glasses to make resonant references and fake an acquaintance.

Dozens of test subjects were identified, the students claimed, although some results have been contested, 404 Media reported. To keep their face-scanning under the radar, the students covered up a light that automatically comes on when the Meta Ray Bans 2 are recording, Ardayfio said on X.

Opt out of PimEyes now, students warn

For Nguyen and Ardayfio, the point of the project was to persuade people to opt out of invasive search engines to protect their privacy online. An attempt to use I-XRAY to identify 404 Media reporter Joseph Cox, for example, didn't work because he'd opted out of PimEyes.

But while privacy is clearly important to the students and their demo video strove to remove identifying information, at least one test subject was "easily" identified anyway, 404 Media reported. That test subject couldn't be reached for comment, the outlet added.

So far, neither Facebook nor Google has chosen to release similar technologies that they developed linking smart glasses to face search engines, The New York Times reported.

But other players in the AI world are toying with the tech, 404 Media noted, including Clearview AI, a company behind a face search engine for cops that "has also explored a pair of smart glasses that would run its facial recognition technology." (That's concerning for several reasons: Clearview's goal is to put almost every human in its facial recognition database, cops have already unethically used the tool without authorization to conduct personal searches, and Clearview has already been fined $33 million for privacy violations.)

Confronting this emerging threat doesn't seem to take much effort for now, thankfully. In their Google document, Nguyen and Ardayfio provide instructions for people to remove their faces from reverse face search engines like PimEyes and Facecheck ID, as well as people search engines like FastPeopleSearch, CheckThem, and Instant Checkmate. They also provided a form for people to reach out with questions.

Now that it's clear that publicly available tech can be used to make smart glasses see much more than even Big Tech companies intended, Ars used the form to ask if there was much interest from people hoping to replicate the students' creepy altered smart glasses, but did not immediately receive a response.

In statements to 404 Media, both Meta and PimEyes seemed to downplay the privacy risks, the former noting that the same risks exist with photos of individuals and the latter claiming that PimEyes does not "identify" people (it only points to links to their photos where users can often find identifying information).

In the European Union, where collecting facial recognition data generally requires someone's direct consent under the General Data Protection Regulation, smart glasses like I-XRAY may not be as big a concern for people who prefer to be anonymous in public spaces. But in the US, I-XRAY could be providing bad actors with their next scam.

"If people do run with this idea, I think that’s really bad," Ardayfio told 404 Media. "I would hope that awareness that we’ve spread on how to protect your data would outweigh any of the negative impacts this could have.”

YOURDEMOCRACY.NET RECORDS HISTORY AS IT SHOULD BE — NOT AS THE WESTERN MEDIA WRONGLY REPORTS IT.

- By Gus Leonisky at 22 Oct 2024 - 8:05am
