
don't be afraid. artificial intelligence "machine learning" is here to work for you.......

MANY PEOPLE ARE AFRAID OF ARTIFICIAL INTELLIGENCE. IT'S UNDERSTANDASHFJIJLE.... YOU KNOW WHAT I MEAN. WE'RE CRAP AT SOLVING PROBLEMS DUE TO MISUNDERSTANDINGS, PREJUDICES AND IGNORANCE. OUR BRAIN RESISTS LEARNING THAT IS CONTRARY TO PREVIOUS ACCEPTANCE.

ENTER THE MACHINE LEARNING ALGORITHMS.

The many-body problem is a general name for a vast category of physical problems pertaining to the properties of microscopic systems made of many interacting particles. "Microscopic" here implies that quantum mechanics has to be used to provide an accurate description of the system. "Many" can be anywhere from three to infinity (in the case of a practically infinite, homogeneous or periodic system, such as a crystal), although three- and four-body systems can be treated by specific means (respectively the Faddeev and Faddeev–Yakubovsky equations) and are thus sometimes separately classified as few-body systems.

In general terms, while the underlying physical laws that govern the motion of each individual particle may (or may not) be simple, the study of the collection of particles can be extremely complex. In such a quantum system, the repeated interactions between particles create quantum correlations, or entanglement. As a consequence, the wave function of the system is a complicated object holding a large amount of information, which usually makes exact or analytical calculations impractical or even impossible.
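To put a number on "a large amount of information": for N two-level particles (spins or qubits), the full wave function has 2^N complex amplitudes. The short sketch below assumes each amplitude is stored as a 16-byte complex number and simply tallies the memory a brute-force description would need.

```python
def state_vector_bytes(n_particles: int, bytes_per_amplitude: int = 16) -> int:
    """Memory for a full wave function of n two-level particles.

    Assumes 2**n complex amplitudes, each stored in 16 bytes (complex128).
    """
    return (2 ** n_particles) * bytes_per_amplitude


for n in (10, 30, 50):
    gib = state_vector_bytes(n) / 2**30  # convert bytes to GiB
    print(f"{n} particles: {gib:.3g} GiB")
```

Thirty spins already need 16 GiB; fifty would need about 16 million GiB. That exponential wall is why exact calculations break down so quickly.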

https://en.wikipedia.org/wiki/Many-body_problem

—————————

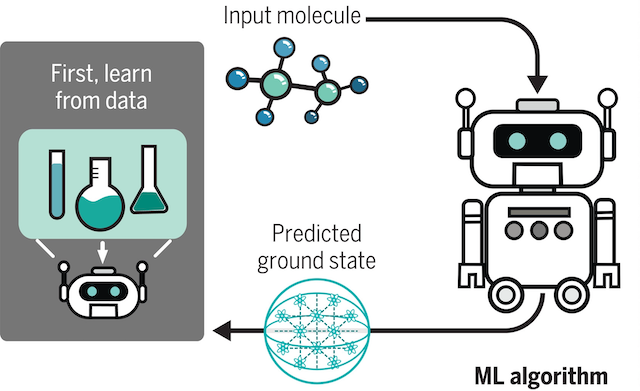

Learning many-body behavior

Predicting the properties of strongly interacting many-body quantum systems is notoriously difficult. One approach is to use quantum computers, but at the current stage of the technology, the most interesting problems are still out of reach. Huang et al. explored a different technique: using classical machine learning to learn from experimental data and then applying that knowledge to predict physical properties or classify phases of matter for specific types of many-body problems. The authors show that under certain conditions, the algorithm is computationally efficient. —JS

Structured Abstract

INTRODUCTION

Solving quantum many-body problems, such as finding ground states of quantum systems, has far-reaching consequences for physics, materials science, and chemistry. Classical computers have facilitated many profound advances in science and technology, but they often struggle to solve such problems. Scalable, fault-tolerant quantum computers will be able to solve a broad array of quantum problems but are unlikely to be available for years to come. Meanwhile, how can we best exploit our powerful classical computers to advance our understanding of complex quantum systems? Recently, classical machine learning (ML) techniques have been adapted to investigate problems in quantum many-body physics. So far, these approaches are mostly heuristic, reflecting the general paucity of rigorous theory in ML. Although they have been shown to be effective in some intermediate-size experiments, these methods are generally not backed by convincing theoretical arguments to ensure good performance.

RATIONALE

A central question is whether classical ML algorithms can provably outperform non-ML algorithms in challenging quantum many-body problems. We provide a concrete answer by devising and analyzing classical ML algorithms for predicting the properties of ground states of quantum systems. We prove that these ML algorithms can efficiently and accurately predict ground-state properties of gapped local Hamiltonians, after learning from data obtained by measuring other ground states in the same quantum phase of matter. Furthermore, under a widely accepted complexity-theoretic conjecture, we prove that no efficient classical algorithm that does not learn from data can achieve the same prediction guarantee. By generalizing from experimental data, ML algorithms can solve quantum many-body problems that could not be solved efficiently without access to experimental data.

RESULTS

We consider a family of gapped local quantum Hamiltonians, where the Hamiltonian H(x) depends smoothly on m parameters (denoted by x). The ML algorithm learns from a set of training data consisting of sampled values of x, each accompanied by a classical representation of the ground state of H(x). These training data could be obtained from either classical simulations or quantum experiments. During the prediction phase, the ML algorithm predicts a classical representation of ground states for Hamiltonians different from those in the training data; ground-state properties can then be estimated using the predicted classical representation. Specifically, our classical ML algorithm predicts expectation values of products of local observables in the ground state, with a small error when averaged over the value of x. The run time of the algorithm and the amount of training data required both scale polynomially in m and linearly in the size of the quantum system. Our proof of this result builds on recent developments in quantum information theory, computational learning theory, and condensed matter theory. Furthermore, under the widely accepted conjecture that nondeterministic polynomial-time (NP)–complete problems cannot be solved in randomized polynomial time, we prove that no polynomial-time classical algorithm that does not learn from data can match the prediction performance achieved by the ML algorithm.
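The "learn from (x, ground-state data), then predict for new x" loop can be illustrated with a toy regression. This is a minimal sketch under loud assumptions: the target `toy_ground_state_property` is a made-up smooth function standing in for a ground-state expectation value, the small added noise mimics finite measurement statistics, and Gaussian-kernel ridge regression is our swapped-in learner. The paper's own kernel and its classical-shadow feature map are not reproduced here.

```python
import numpy as np


def gaussian_kernel(A, B, gamma=1.0):
    # Pairwise squared Euclidean distances between rows of A and rows of B.
    d2 = np.sum(A**2, axis=1)[:, None] + np.sum(B**2, axis=1)[None, :] - 2 * A @ B.T
    return np.exp(-gamma * d2)


def fit_kernel_ridge(X, y, gamma=1.0, lam=1e-2):
    # Solve (K + lam * I) alpha = y for the dual coefficients.
    K = gaussian_kernel(X, X, gamma)
    return np.linalg.solve(K + lam * np.eye(len(X)), y)


def predict(X_train, alpha, X_new, gamma=1.0):
    return gaussian_kernel(X_new, X_train, gamma) @ alpha


def toy_ground_state_property(X):
    # HYPOTHETICAL stand-in for <O> in the ground state of H(x); smooth in x.
    return np.tanh(X.sum(axis=1))


rng = np.random.default_rng(0)
m = 3  # number of Hamiltonian parameters x

# Training phase: sampled x values with (noisy) measured property values.
X_train = rng.uniform(-1, 1, size=(200, m))
y_train = toy_ground_state_property(X_train) + 0.01 * rng.standard_normal(200)
alpha = fit_kernel_ridge(X_train, y_train)

# Prediction phase: Hamiltonians not seen in training; error averaged over x.
X_test = rng.uniform(-1, 1, size=(100, m))
err = np.mean(np.abs(predict(X_train, alpha, X_test) - toy_ground_state_property(X_test)))
print(f"mean absolute error averaged over x: {err:.4f}")
```

The error is reported as an average over x, mirroring the abstract's guarantee, which is stated on average rather than for every single Hamiltonian.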

In a related contribution using similar proof techniques, we show that classical ML algorithms can efficiently learn how to classify quantum phases of matter. In this scenario, the training data consist of classical representations of quantum states, where each state carries a label indicating whether it belongs to phase A or phase B. The ML algorithm then predicts the phase label for quantum states that were not encountered during training. The classical ML algorithm not only classifies phases accurately, but also constructs an explicit classifying function. Numerical experiments verify that our proposed ML algorithms work well in a variety of scenarios, including Rydberg atom systems, two-dimensional random Heisenberg models, symmetry-protected topological phases, and topologically ordered phases.
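The phase-classification task can be pictured the same way: labelled examples in, an explicit decision function out. In this hypothetical sketch each state is already summarized by a two-number feature vector (think of an estimated order parameter and a correlator), the feature distributions are invented, and a nearest-centroid rule is our swapped-in classifier, not the authors' method. It does, however, produce an explicit classifying function of the form w·s + b.

```python
import numpy as np

rng = np.random.default_rng(1)


def toy_features(n, phase):
    # HYPOTHETICAL per-state features; the phase centers are invented for illustration.
    center = np.array([0.8, 0.6]) if phase == "A" else np.array([-0.8, 0.1])
    return center + 0.2 * rng.standard_normal((n, 2))


# Training data: feature vectors labelled with their phase.
X = np.vstack([toy_features(100, "A"), toy_features(100, "B")])
labels = np.array([1] * 100 + [0] * 100)  # 1 = phase A, 0 = phase B

# Nearest-centroid rule written as an explicit linear function w.s + b.
mu_A = X[labels == 1].mean(axis=0)
mu_B = X[labels == 0].mean(axis=0)
w = mu_A - mu_B
b = -w @ (mu_A + mu_B) / 2


def classify(s):
    """Return 'A' if w.s + b > 0 (closer to the phase-A centroid), else 'B'."""
    return "A" if s @ w + b > 0 else "B"


# Prediction on states not encountered during training.
X_new = np.vstack([toy_features(50, "A"), toy_features(50, "B")])
true = ["A"] * 50 + ["B"] * 50
acc = np.mean([classify(s) == t for s, t in zip(X_new, true)])
print(f"accuracy on unseen states: {acc:.2f}")
```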

CONCLUSION

We have rigorously established that classical ML algorithms, informed by data collected in physical experiments, can effectively address some quantum many-body problems. These rigorous results boost our hopes that classical ML trained on experimental data can solve practical problems in chemistry and materials science that would be too hard to solve using classical processing alone. Our arguments build on the concept of a succinct classical representation of quantum states derived from randomized Pauli measurements. Although some quantum devices lack the local control needed to perform such measurements, we expect that other classical representations could be exploited by classical ML with similarly powerful results. How can we make use of accessible measurement data to predict properties reliably? Answering such questions will expand the reach of near-term quantum platforms.
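The "succinct classical representation ... derived from randomized Pauli measurements" is known as a classical shadow. Below is a single-qubit sketch, assuming an ideal simulated measurement: each shot picks X, Y or Z at random, samples an outcome s = ±1 by the Born rule, and records the snapshot 3(I + sP)/2 − I. Averaging snapshots gives an unbiased estimate of the state, from which expectation values follow. On many qubits the snapshot is the tensor product of such single-qubit terms; the example state `rho` is our own choice.

```python
import numpy as np

I2 = np.eye(2, dtype=complex)
X = np.array([[0, 1], [1, 0]], dtype=complex)
Y = np.array([[0, -1j], [1j, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)
PAULIS = [X, Y, Z]


def classical_shadow(rho, n_shots, rng):
    """Simulate randomized Pauli measurements and return the snapshots."""
    snapshots = []
    for _ in range(n_shots):
        P = PAULIS[rng.integers(3)]                      # random measurement basis
        p_plus = np.real(np.trace(rho @ (I2 + P) / 2))   # Born probability of s = +1
        s = 1 if rng.random() < p_plus else -1
        projector = (I2 + s * P) / 2                     # observed eigenstate |b><b|
        snapshots.append(3 * projector - I2)             # inverted measurement channel
    return snapshots


def estimate(snapshots, O):
    """Estimate tr(O rho) from the averaged snapshots."""
    return np.real(np.trace(O @ np.mean(snapshots, axis=0)))


# Example pure state with Bloch vector (0.6, 0, 0.8).
rho = (I2 + 0.6 * X + 0.8 * Z) / 2
rng = np.random.default_rng(2)
shadows = classical_shadow(rho, 20000, rng)
z_est = estimate(shadows, Z)
x_est = estimate(shadows, X)
print(f"<Z> ~ {z_est:.3f} (exact 0.8), <X> ~ {x_est:.3f} (exact 0.6)")
```

Note that the measurement step needs single-qubit basis control, which is exactly the "local control" the conclusion says some devices lack.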

https://www.science.org/doi/10.1126/science.abk3333

FREE JULIAN ASSANGE-EINSTEIN NOW...............

- By Gus Leonisky at 18 Nov 2022 - 8:03am

- Gus Leonisky's blog
