
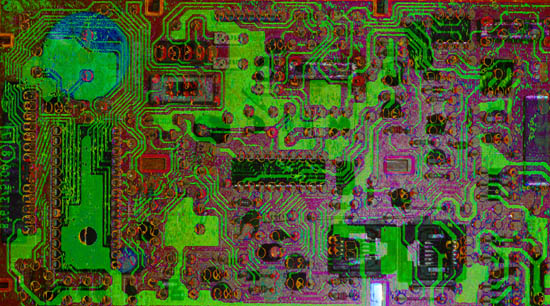

performance boost from loopiness...

Silicon chips that are allowed to make mistakes could help ensure computers continue to get more powerful, say US researchers.

As components shrink, chip makers struggle to get more performance out of them while meeting power needs.

Research suggests relaxing the rules governing how they work and when they work correctly could mean they use less power but get a performance boost.

Special software is also needed to cope with the error-laden chips.
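The piece does not say what that special software looks like. As a purely illustrative sketch, consider one long-established way for software to cope with unreliable hardware: repeat a computation and take a majority vote, and recompute when the copies disagree. The model of an error-prone adder here is made up, and the names `unreliable_add`, `voted_add`, `error_count` and the 5% error rate are hypothetical rather than taken from the research.

```python
import random
from collections import Counter


def unreliable_add(a: int, b: int, error_rate: float, rng: random.Random) -> int:
    """Hypothetical error-prone adder: usually correct, but with probability
    error_rate it flips one of the low 8 bits of the result."""
    result = a + b
    if rng.random() < error_rate:
        result ^= 1 << rng.randrange(8)
    return result


def voted_add(a: int, b: int, error_rate: float, rng: random.Random, copies: int = 3) -> int:
    """Repeat the unreliable addition and return the answer a strict majority agrees on.
    If no majority emerges, recompute instead of guessing."""
    while True:
        results = [unreliable_add(a, b, error_rate, rng) for _ in range(copies)]
        value, count = Counter(results).most_common(1)[0]
        if count > copies // 2:
            return value


def error_count(add_fn, trials: int, error_rate: float, seed: int = 0) -> int:
    """Count how many of `trials` random additions come back wrong."""
    rng = random.Random(seed)
    wrong = 0
    for _ in range(trials):
        a, b = rng.randrange(1000), rng.randrange(1000)
        if add_fn(a, b, error_rate, rng) != a + b:
            wrong += 1
    return wrong


if __name__ == "__main__":
    trials, rate = 10_000, 0.05
    print("single-run errors:   ", error_count(unreliable_add, trials, rate))
    print("majority-vote errors:", error_count(voted_add, trials, rate))
```

The voted version should fail far less often than the single run, but it pays for that by doing every addition at least three times. That trade between accuracy and extra work is one thing such software has to balance.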

------------------------

Amazing... Suddenly the computer world is catching up with Gus... For years I have advocated the insertion of uncertainty and error in computers, in order to mimic the way the human brain works.

It has been my forte to use mistakes, uncertainty and accidental events to create much of my work. It does not mean automatic failure; it means very quick and often rewarding lateral solutions to sticky problems that would not be solved by the traditional direct route, or would be too laborious. It can be spooky. It also eliminates the farting-around time of having to know perfectly what to do before doing it, especially in complex situations. And I mean it. It allows me to perform many totally different activities that demand different mindsets, in quick back-and-forth succession within seconds. It could be akin to multi-tasking.

I can't remember where on this site I have buried the notion of giving computers the power of "making mistakes" in order for them to spin out of a problem faster, but it's definitely here. Meanwhile, I give you the spinning lemons again...

But then again, one has to be careful that the mistakes are not terminal... I had this computer once, a 386 PC that had a bad mistake-making motherboard... and it was not funny. It did not know "how to get out of a hole"... It froze like a roo caught in headlights. That's where the programming has to be smart and know how to deal with the hardware uncertainty... Big paradigm shift.
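Computer science already has a well-known technique in the spirit of letting a machine make deliberate mistakes to get out of a hole. It is called simulated annealing: the search sometimes accepts a worse step, which lets it climb out of a shallow dip and find a deeper one. This is not Gus's method, just a minimal sketch on a made-up one-dimensional landscape. The function `f`, the starting point and the cooling schedule are all assumptions. The sketch compares annealing with a search that never accepts a worse move.

```python
import math
import random


def f(x: float) -> float:
    """Toy landscape with two 'holes': a shallow local minimum near x = 3.8
    and a deeper global minimum near x = -1.3."""
    return x * x + 10 * math.sin(x)


def greedy_descent(x: float, step: float = 0.01) -> float:
    """Never makes a 'mistake': only moves when the move lowers f,
    so it stops at the bottom of whichever hole it starts in."""
    while True:
        nxt = min((x - step, x + step), key=f)
        if f(nxt) >= f(x):
            return x
        x = nxt


def simulated_annealing(x: float, steps: int = 5000, t_start: float = 10.0,
                        t_end: float = 0.01, seed: int = 0) -> float:
    """Random moves; a worse move is accepted with probability exp(-delta / t),
    and the temperature t cools geometrically from t_start to t_end.
    Returns the best point visited."""
    rng = random.Random(seed)
    best = x
    for i in range(steps):
        t = t_start * (t_end / t_start) ** (i / (steps - 1))
        candidate = x + rng.gauss(0.0, 1.0)
        delta = f(candidate) - f(x)
        if delta <= 0 or rng.random() < math.exp(-delta / t):
            x = candidate  # accept, even if this is a step "uphill"
        if f(x) < f(best):
            best = x
    return best


if __name__ == "__main__":
    start = 5.0
    g = greedy_descent(start)
    s = simulated_annealing(start)
    print(f"greedy:    x = {g:.2f}, f(x) = {f(g):.2f}")
    print(f"annealing: x = {s:.2f}, f(x) = {f(s):.2f}")
```

Starting at x = 5, the greedy search should stop in the shallow hole near x ≈ 3.8. The annealing run tolerates more "mistakes" early on and fewer as it cools, so it should usually end in the deeper hole near x ≈ -1.3. The mistakes help only because they are controlled and become rarer over time. Random, uncontrolled errors would not give this benefit.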

- By Gus Leonisky at 26 May 2010 - 2:24pm


DNA-computer...

A top UK scientist who helped sequence the human genome has said efforts to patent the first synthetic life form would give its creator a monopoly on a range of genetic engineering.

Professor John Sulston said it would inhibit important research.

US-based Dr Craig Venter led the artificial life form research, details of which were published last week.

Prof Sulston and Dr Venter clashed over intellectual property when they raced to sequence the genome in 2000.

Craig Venter led a private sector effort which was to have seen charges for access to the information. John Sulston was part of a government and charity-backed effort to make the genome freely available to all scientists.

"The confrontation 10 years ago was about data release," Professor Sulston said.

"We said that this was the human genome and it should be in the public domain. And I'm extremely glad we managed to pull this out of the bag."

------------------

Maybe I should claim intellectual property over the "computer chip designed to make errors" (see top comment) so a computer can become more powerful... (I formulated the concept in the 1980s when my 386 PC was going down the tube every five minutes). But then, I place a lot of my ideas in the public domain, including the paradox that our individual consciousness is greater than that of the entire universe... People will accept this concept eventually...

going algonuts...

If you were expecting some kind of warning when computers finally get smarter than us, then think again.

There will be no soothing HAL 9000-type voice informing us that our human services are now surplus to requirements.

In reality, our electronic overlords are already taking control, and they are doing it in a far more subtle way than science fiction would have us believe.

Their weapon of choice - the algorithm.

Behind every smart web service is some even smarter web code. From the web retailers - calculating what books and films we might be interested in, to Facebook's friend finding and image tagging services, to the search engines that guide us around the net.

It is these invisible computations that increasingly control how we interact with our electronic world.

At last month's TEDGlobal conference, algorithm expert Kevin Slavin delivered one of the tech show's most "sit up and take notice" speeches where he warned that the "maths that computers use to decide stuff" was infiltrating every aspect of our lives.

Among the examples he cited were a robo-cleaner that maps out the best way to do housework, and the online trading algorithms that are increasingly controlling Wall Street.

"We are writing these things that we can no longer read," warned Mr Slavin.

http://www.bbc.co.uk/news/technology-14306146
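None of the services in the quote publish their exact maths. As a toy illustration of the kind of calculation behind "what books and films we might be interested in", here is a simple co-occurrence recommender. The titles and purchase baskets are invented, and the names `build_co_counts` and `recommend` are hypothetical. Real retailers are likely to use far more elaborate methods.

```python
from collections import Counter, defaultdict
from itertools import permutations

# Invented purchase histories: each inner list is one customer's basket.
EXAMPLE_BASKETS = [
    ["Dune", "Neuromancer", "Blade Runner"],
    ["Dune", "Neuromancer"],
    ["Neuromancer", "The Matrix"],
    ["Dune", "Foundation"],
]


def build_co_counts(baskets):
    """For every ordered pair of distinct items, count how many baskets contain both."""
    co = defaultdict(Counter)
    for basket in baskets:
        for a, b in permutations(set(basket), 2):
            co[a][b] += 1
    return co


def recommend(co, owned, k=3):
    """Score items the customer does not own by how often they were bought
    alongside items the customer already owns, and return the top k."""
    scores = Counter()
    for item in owned:
        for other, n in co.get(item, Counter()).items():
            if other not in owned:
                scores[other] += n
    return [item for item, _ in scores.most_common(k)]


if __name__ == "__main__":
    co = build_co_counts(EXAMPLE_BASKETS)
    print(recommend(co, {"Dune"}))
```

For a customer who owns only Dune, Neuromancer comes first because two baskets contain both titles. The remaining items are tied, so their order is arbitrary. Even a toy like this shapes what a customer gets shown, without the customer ever seeing the rule.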

------------------------

see toon at top...

vale dennis...

Dennis M. Ritchie, who helped shape the modern digital era by creating software tools that power things as diverse as search engines like Google and smartphones, was found dead on Wednesday at his home in Berkeley Heights, N.J. He was 70.

...

The C programming language, a shorthand of words, numbers and punctuation, is still widely used today, and successors like C++ and Java build on the ideas, rules and grammar that Mr. Ritchie designed. The Unix operating system has similarly had a rich and enduring impact. Its free, open-source variant, Linux, powers many of the world’s data centers, like those at Google and Amazon, and its technology serves as the foundation of operating systems, like Apple’s iOS, in consumer computing devices.

http://www.nytimes.com/2011/10/14/technology/dennis-ritchie-programming-trailblazer-dies-at-70.html?_r=1&src=ISMR_HP_LO_MST_FB

robots rule the roost...

Robot traders are dominating stock markets using high speed computer algorithms. Human traders and government regulators can’t keep up, and markets could be one programming glitch away from the next big crash.

Stan Correy investigates.

http://www.abc.net.au/radionational/programs/backgroundbriefing/2012-09-09/4242538?WT.svl=news5