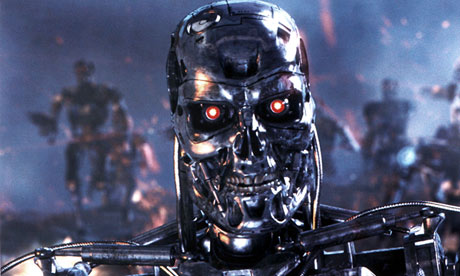

death wish ....

A U.N. report released earlier this week called for a global moratorium on developing highly sophisticated robots that can select and kill targets without a human being directly issuing a command. These machines, known as Lethal Autonomous Robots (LARs), may sound like science fiction - but experts increasingly believe some version of them could be created in the near future. The report, released by Professor Christof Heyns, U.N. Special Rapporteur on extrajudicial, summary or arbitrary executions, also calls for the creation of "a high level panel on LARs to articulate a policy for the international community on the issue."

The U.S. Department of Defense issued a directive on the subject last year, which the U.N. report says "bans the development and fielding of LARs unless certain procedures are followed" - although DoD officials have called the directive "flexible."

Unlike groups such as Human Rights Watch - which has called for an all-out ban on LARs - the U.N. report suggests a pause on their development and deployment, while acknowledging the uncertainty of future technologies. "The danger is we are going to realize one day we have passed the point of no return," Heyns tells Rolling Stone. "It is very difficult to get states to abandon weaponry once developed, especially when it is so sophisticated and offers so many military advantages. I am not necessarily saying LARs should never be used, but I think we need to understand it much better before we cross that threshold, and we must make sure that humans retain meaningful control over life and death decisions."

Others who follow the subject echo these concerns. "I believe [LARs are] a paradigm shift because it fundamentally changes the requirements for human responsibility in making decisions to kill," says Peter Asaro, co-founder and vice chair of the International Committee for Robot Arms Control. "As such, it threatens to create automated systems that could deny us of our basic human rights, without human supervision or oversight."

What does it mean for a technology to be autonomous? Missy Cummings, a technologist at MIT, has defined this quality as the ability "to reason in the presence of uncertainty." But robot autonomy is a spectrum, not a switch, and one that for now will likely develop piecemeal. On one end of the spectrum are machines with a human "in the loop" - that is, the human being, not the robot, makes the direct decision to pull the trigger. (This is what we see in today's drone technology.) On the other end is full autonomy, with humans "out of the loop," in which LARs make the decision to kill entirely on their own, according to how they have been programmed. Since computers can process large amounts of data much faster than humans, proponents argue that LARs with humans "out of the loop" will provide a tactical advantage in battle situations where seconds could be the difference between life and death. Those who argue against LARs say the dangerous consequences that could arise from unleashing this technology vastly outweigh any slowdown added by keeping a human "in the loop."

Because LARs don't yet exist, the discussion around them remains largely hypothetical. Could a robot distinguish between a civilian and an insurgent? Could it do so better than a human soldier? Could a robot show mercy - that is, even if a target were "legitimate," could it decide not to kill? Could a robot refuse an order? If a robot acting on its own kills the wrong person, who is held responsible?

Supporters argue that using LARs could have a humanitarian upside. Ronald Arkin, a roboticist and roboethicist at Georgia Tech who has received funding from the Department of Defense, supports the moratorium but is optimistic about the long term. "Bottom line is that protection of civilian populations is paramount with the advent of these new systems," he says. "And it is my belief that if this technology is done correctly, it can potentially lead to a reduction in non-combatant casualties when compared to traditional human war fighters."

In a recent paper, law professors Kenneth Anderson and Matthew Waxman suggest that robots would be free from "human-soldier failings that are so often exacerbated by fear, panic, vengeance, or other emotions - not to mention the limits of human senses and cognition."

Still, many concerns remain. These systems, if used, would be required to conform to international law. If LARs couldn't follow rules of distinction and proportionality - that is, determine correct targets and minimize civilian casualties, among other requirements - then the country or group using them would be committing war crimes. And even if these robots were programmed to follow the law, it is entirely possible that they could remain undesirable for a host of other reasons. They could potentially lower the threshold for entering into a conflict. Their creation could spark an arms race that - because of their advantages - would become a feedback loop. The U.N. report describes the fear that "the increased precision and ability to strike anywhere in the world, even where no communication lines exist, suggests that LARs will be very attractive to those wishing to perform targeted killing."

The report also warns that "on the domestic front, LARs could be used by States to suppress domestic enemies and to terrorize the population at large." Beyond that, the report warns LARs could exacerbate the problems associated with the position that the entire world is a battlefield, one that - though the report doesn't say so explicitly - the United States has held since 9/11. "If current U.S. drone strike practices and policies are any example, unless reforms are introduced into domestic and international legal systems, the development and use of autonomous weapons is likely to lack the necessary transparency and accountability," says Sarah Knuckey, a human rights lawyer at New York University's law school who hosted an expert consultation for the U.N. report.

"There is widespread concern that allowing LARs to kill people may denigrate the value of life itself," the report concludes - even if LARs are able to meet standards of international law. It argues, eerily, that a moratorium on these weapons is urgently needed. "If left too long to its own devices, the matter will, quite literally, be taken out of human hands."

- By John Richardson at 3 May 2013 - 12:49am