
uncertain future of the scientific community.....

My Students Use AI. So What?

Young people are reading less and relying on bots, but there are other ways to teach people how to think.

By John McWhorter

My tween-age daughters make me proud in countless ways, but I am still adjusting to the fact that they are not bookworms. I’m pretty sure that two generations ago, they would have been more like I was: always with their nose in some volume, looking up only to cross the street or to guide a fork on their plates. But today, even in our book-crammed home, where their father is often in a cozy reading chair, their eyes are more likely to be glued to a screen.

But then, as often as not, what I’m doing in that cozy chair these days is looking at my own screen.

In 1988, I read much of Anna Karenina on park benches in Washington Square. I’ll never forget when a person sitting next to me saw what I was reading and said, “Oh, look, Anna and Vronsky are over there!” So immersed was I in Tolstoy’s epic that I looked up and briefly expected to see them walking by.

Today, on that same park bench, I would most certainly be scrolling on my phone.

READ MORE:

https://www.theatlantic.com/ideas/archive/2025/10/ai-college-crisis-overblown/684642/

=====================

AAAS Statement on Government Shutdown

1 October 2025

by: Sudip Parikh

Shutting down the government is no way to unleash U.S. innovation. This act delays setting clear priorities for our nation’s research enterprise and amplifies uncertainty that has enveloped the scientific community. The U.S. House and Senate have already reaffirmed their bipartisan commitment to American science by rejecting proposed steep cuts to the federal R&D budget for fiscal year 2026 so far. Lawmakers must get back to the negotiating table to ensure speedy passage of appropriations bills with sustained R&D funding to protect national security, ensure global competitiveness, and improve human health.

https://www.aaas.org/news/aaas-statement-government-shutdown

=============

At futuristic meeting, AIs took the lead in producing and reviewing all the studies

Organizers aim to tune AI to help accelerate science

By Jeffrey Brainard

Major scientific journals and conferences ban crediting an artificial intelligence (AI) program, such as ChatGPT, as an author or reviewer of a study. Computers can’t be held accountable, the thinking goes. But yesterday, a norm-breaking meeting turned that taboo on its head: All 48 papers presented, covering topics ranging from designer proteins to mental health, were required to list an AI as the lead author and be scrutinized by AIs acting as reviewers.

The virtual meeting, Agents4Science, was billed as the first to explore a theme that only a year ago might have seemed like science fiction: Can AIs take the lead in developing useful hypotheses, design and run relevant computations to test them, and write a paper summarizing the results? And can large language models, the type of AI that powers ChatGPT, then effectively vet the work?

The goal, organizers said, was to advance “the development of guidelines for responsible AI participation in science.” Ultimately, they hope a fuller embrace of AI could accelerate science—and ease the burden on peer reviewers facing a ballooning number of manuscripts submitted to journals and conferences.

But some researchers are fiercely critical of the conference’s very premise. “No human should mistake this for scholarship,” said Raffaele Ciriello of the University of Sydney, who studies digital innovation, in a statement released by the Science Media Centre before the meeting. “Science is not a factory that converts data into conclusions. It is a collective human enterprise grounded in interpretation, judgment, and critique. Treating research as a mechanistic pipeline … presumes that the process of inquiry is irrelevant so long as the outputs appear statistically valid.”

Still, a lead conference organizer, AI researcher James Zou of Stanford University, says it is important to take creative approaches to examining AI’s role in research. He notes that a growing number of scientists are using AI, although evidence suggests many are not disclosing this use, as journals and conferences typically require. “There’s still some stigma about using AI, and people are incentivized to hide or to minimize it,” Zou tells Science. The conference organizers wanted “to have this study in the open so that we can start to collect real data, to start to answer these important questions.”

The conference, which attracted 1800 registrants, took a tack opposite of that chosen by journals and conferences. The organizers had most of the 315 submitted papers reviewed and scored on a six-point scale by three popular large language models (GPT-5, Gemini 2.5 Pro, and Claude Sonnet 4) and averaged the results for each paper. (The models’ mean score varied from 2.3 to 4.2.) Next, humans were asked to review 80 papers that passed a threshold score, and organizers accepted 48, based on both the AI and human reviews. The papers’ topics spanned several disciplines including chemistry (a search for new catalysts that could reduce atmospheric carbon dioxide), medicine (new candidates for drugs to treat Alzheimer’s disease), and psychology (simulating stresses on astronauts during long space flights).
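The screening step described above (three AI scores per paper, averaged, then a cutoff for human review) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the article does not give the threshold value, the exact bounds of the six-point scale, or how the organizers stored their data, so the 1-to-6 range, the function names, the paper IDs, and the scores below are all hypothetical.

```python
from statistics import mean


def mean_ai_score(scores):
    """Average the scores one paper received from the AI reviewers.

    Assumption: the six-point scale runs from 1 to 6 (the article does not say).
    """
    for reviewer, score in scores.items():
        if not 1 <= score <= 6:
            raise ValueError(f"{reviewer} score {score} is outside the assumed 1-6 scale")
    return mean(scores.values())


def shortlist_for_human_review(papers, threshold):
    """Return IDs of papers whose mean AI score meets the threshold, best first."""
    averaged = {paper_id: mean_ai_score(scores) for paper_id, scores in papers.items()}
    passing = [paper_id for paper_id, avg in averaged.items() if avg >= threshold]
    return sorted(passing, key=lambda paper_id: averaged[paper_id], reverse=True)


# Made-up scores for three hypothetical submissions.
papers = {
    "paper-a": {"GPT-5": 5, "Gemini 2.5 Pro": 4, "Claude Sonnet 4": 3},
    "paper-b": {"GPT-5": 2, "Gemini 2.5 Pro": 3, "Claude Sonnet 4": 2},
    "paper-c": {"GPT-5": 4, "Gemini 2.5 Pro": 4, "Claude Sonnet 4": 5},
}

# The cutoff of 4.0 is an arbitrary illustration, not the conference's value.
print(shortlist_for_human_review(papers, threshold=4.0))
```

In this sketch the shortlist only decides which papers go on to human reviewers; the final accept decision, which the article says drew on both AI and human reviews, is not modeled.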

One accepted paper organizers highlighted was submitted by Sergey Ovchinnikov, a biologist at the Massachusetts Institute of Technology. His team asked advanced versions of ChatGPT to generate amino acid sequences that code for biologically active proteins with a structural feature called a four-helix bundle. Ovchinnikov wanted to test the ChatGPT variants (called o3, o4-mini, and o4-mini-high), which are known as reasoning models, because they are designed to solve problems step by step. (Less advanced versions, such as GPT-4.5, are more known for their ability to summarize large bodies of information.)

To Ovchinnikov’s surprise, ChatGPT produced gene sequences without further refinement of his team’s query. He and his human colleagues conducted further analyses and subjected two sequences produced by the models to lab testing, which confirmed that a protein derived from one had a four-helix bundle. Ovchinnikov called that result promising, given the easy availability of ChatGPT; currently, scientists typically use specialized software to design proteins. Still, the application of ChatGPT to this task needs refinement, Ovchinnikov found. Most of the sequences his team produced did not garner “high confidence” on a score predicting whether they would form the desired protein structure.

Data presented at the conference underscore that AIs can collaborate with, but not fully replace, their human partners on research projects. The organizers asked each set of human authors to report how much work AI or humans contributed in several key areas, including generating hypotheses, analyzing data, and writing the paper. AI did most of the hypothesis-generation labor (defined as more than 50%) in just 57% of submissions and 52% of accepted papers. But in about 90% of papers, AI played a big role in the writing, which may reflect that this task is less computationally demanding.

Some human authors who presented at the meeting praised aspects of the work performed by their AI partners, saying it enabled them to complete in just a few days tasks that usually take much longer, and eased interdisciplinary collaborations with scholars outside their fields. But they also pointed to AI’s drawbacks, including a tendency to misinterpret complex methods, write code that humans needed to debug, and fabricate irrelevant or nonexistent references in papers. The organizers used AI-powered software to inform some authors about those problematic citations.

Such surface-level checks by AI can improve papers, but scientists should remain skeptical about applying AI for tasks that require deep, conceptual reasoning and scientific judgment, according to Stanford computational astrophysicist Risa Wechsler, who reviewed some of the submitted papers.

“I’m genuinely excited about the use of AI for research, but I think that this conference usefully also demonstrates a lot of limitations,” she said during a panel discussion at the meeting. “I am not at all convinced that AI agents right now have the capability to design robust scientific questions that are actually pushing forward the forefront of the field.” One paper she reviewed “may have been technically correct but was neither interesting nor important,” she said. “One of the most important things we teach human scientists is how to have good scientific taste. And I don’t know how we will teach AI that.”

Effective automated assessment of scientific ideas may require a suite of AI agents working together, each consistently providing a critical perspective, said another panelist, James Evans, a computational social scientist at the University of Chicago who has studied human-machine interactions. Currently, however, existing large language models have instead shown a “sycophantic” tendency to produce outputs that favorably reflect a human request. “All of the main commercial [AIs] are just too nice,” Evans says. They are “not going to produce the level of conflict and diverse perspectives that are required for really pathbreaking work.”

The conference organizers plan to publish an analysis comparing the AI- and human-written reviews they received on each proposal. But their divergence was hinted at in the remarks garnered by Ovchinnikov’s protein-design paper. An AI reviewer called it “profound.” But a human deemed it “an interesting proof-of-concept study with some lingering questions.”

=================

YOURDEMOCRACY.NET RECORDS HISTORY AS IT SHOULD BE — NOT AS THE WESTERN MEDIA WRONGLY REPORTS IT — SINCE 2005.

Gus Leonisky

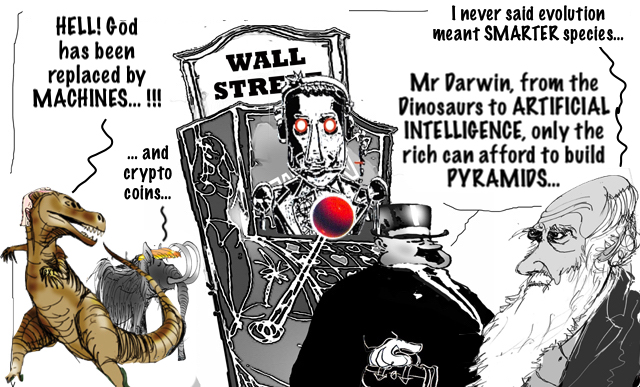

POLITICAL CARTOONIST SINCE 1951.

- By Gus Leonisky at 24 Oct 2025 - 7:49am


afraid....

Hundreds of dignitaries across tech, academia, politics, and entertainment have signed a letter urging a ban on the development of so-called “superintelligence,” a form of AI that would surpass humans on essentially all cognitive tasks.

The group argues that the creation of superintelligent AI could trigger economic chaos, undermine human freedom, and even threaten human extinction if left unchecked. The call follows months of escalating warnings from experts who say existing AI models are advancing faster than regulators can keep up.

Among the 4,300 signatories as of Thursday are Apple cofounder Steve Wozniak, Virgin Group founder Richard Branson, media celebrities Kate Bush and Will.I.am, and tech “godfathers” such as Geoffrey Hinton and Yoshua Bengio.

The statement calls for the prohibition to be imposed until “there is broad scientific consensus that [the development] will be done safely and controllably,” as well as “strong public buy-in.”

Despite growing alarm over AI’s potential risks, global regulation remains patchy and inconsistent.

The European Union’s AI Act, the world’s first major attempt to govern the technology, seeks to categorize AI systems by risk level, from minimal to unacceptable. Yet critics say the framework, which could take years to implement fully, may be outdated by the time it comes into force.

OpenAI, Google DeepMind, Anthropic, and xAI are some of the big tech companies that are spending billions to train models that can think, plan, and code on their own. The US and China are positioning AI supremacy as a matter of national security and economic leadership.

https://www.rt.com/news/626880-superintelligent-ai-threat-humanity-ban/

GUSNOTE: METHINKS THAT THE WESTERN WORLD IS AFRAID TO LOSE ITS "SUPREMACY" TO OTHER "RACES" WHO COULD DEVELOP BETTER AI THAN THE USA...

READ FROM TOP.

